All of my opinions are italicized and sources are in blue.

The LG Signature OLED T is the culmination of the company’s work over the last few years, rolled into one product. It combines the rollable screen technology of the LG Signature OLED R with the wireless connectivity box found in last year’s LG M3 OLED. The result is a TV that feels utterly futuristic.

When you’re not using it, the LG OLED T can show fun screen savers or the weather, but with the press of a button on the remote, you can unfurl a matte black panel that covers the back of the screen. Doing so lets you take full advantage of the OLED panel’s deep, rich black levels and well-saturated colors.

This TV isn’t for people seeking the highest specs or the finest OLED-watching experience. It’s for people who want to show off a one-of-a-kind TV and blow people’s minds at dinner parties. This won’t be a bestseller, but the TV should finally bring transparent display technologies to a consumer product.

LG says the see-through TV will be available this year but hasn’t shared pricing.

Samsung’s transparent microLED is a prototype display that will, one day, make its way into Samsung’s TV lineup. That’s what happened to Samsung’s The Wall, another microLED TV that started off as a concept and then became available to buy, and it will one day happen for this microLED, too.

So what makes this one special? MicroLED, in case you missed it, uses millions of microscopic, self-emissive LEDs to form the image. With that many tiny light sources packed together, the screen can produce a brighter, more colorful image. Adding a transparent panel allows for some pretty neat effects, like fireworks floating in mid-air.
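For a sense of scale, here is a rough count of how many LEDs a microLED panel needs. It assumes one red, one green and one blue LED per pixel, which is a simplification for illustration rather than a description of Samsung’s panel.

```python
def microled_count(width_px: int, height_px: int, leds_per_pixel: int = 3) -> int:
    """Number of individual LEDs in a panel, assuming one LED per subpixel."""
    return width_px * height_px * leds_per_pixel

# One red, one green and one blue LED per pixel (an assumption for illustration).
for name, (w, h) in {"4K": (3840, 2160), "8K": (7680, 4320)}.items():
    print(f"{name}: {microled_count(w, h):,} LEDs")
```

Under that assumption a 4K screen needs just under 25 million LEDs and an 8K screen just under 100 million.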

It’s important to differentiate Samsung Display from Samsung Mobile. The Display team is focused on testing the limits of OLED panels, which includes stress testing and developing concept devices that showcase display capabilities. We’ve seen all sorts of prototypes come from Samsung Display over the years, but it’s usually the flexible phones that catch our attention.

This year, Samsung Display has two new concepts: the Flex Liple and the Flex In and Out. Liple is an attempted portmanteau of “light” and “simple,” since the clamshell device looks a bit more minimalistic than the Galaxy Z Flip 5. Instead of a separate outer display, it relies on a smidge of spillover from the main screen.

The top of the Flex Liple’s internal display wraps over the top edge of the device, leaving a sliver of screen visible when it’s closed. The idea is that you can glance at information such as the time, local temperature and notification alerts.

Meanwhile, the Flex In and Out takes a more maximalist approach to screens. In addition to folding open like a typical clamshell, it bends completely in half in the other direction. I’m not sure why I’d need my smartphone to be this flexible, but it’s certainly an interesting concept. I also like how, when it’s folded in, a portion of the interior screen stays visible, offering an abbreviated look at useful information.

Then there’s the Rollable Flex, which is a device that we’re not sure how to describe. Samsung clearly doesn’t know how to describe it either since it simply calls it “a unique device.” Regardless, the Rollable Flex has an expandable display that can become up to five times larger than its original size.

The Rollable Flex is far from the first rollable display concept, but the more exciting idea is Samsung’s Flex Hybrid devices. These can both fold and extend, which seems like a superior way to fit a lot of screen space into a compact form factor. However, all those moving parts might be worrying from a durability and repair perspective.

As reported by Sammy Fans,

Samsung Display is the first company to publicly introduce an RGB version of OLEDoS (OLED on Silicon). OLEDoS panels are high-definition displays with very small pixels, made by depositing organic materials onto silicon wafers. These displays will play a crucial role in XR headsets, which are growing in popularity.

It is the highest-resolution RGB OLEDoS display currently available in the industry. Despite measuring just 1.03 inches, it packs a pixel density of 3,500 PPI, enough for a resolution comparable to a 4K TV’s.

RGB OLEDoS uses red, green, and blue OLEDs on a silicon wafer to generate colors without the need for a separate light source. eMagin, the US microdisplay maker Samsung Display acquired in 2023, will showcase its products at the event, including its OLEDoS-enabled military helmets and night vision goggles.

As reported by Sam Mobile,

Samsung unveiled its new QD-OLED TVs: S85D, S90D, and S95D. They feature higher brightness, better colors, and several AI-powered features to improve picture quality. Some of those TVs use Samsung Display’s third-generation QD-OLED panels, and the company has now detailed them.

Samsung’s third-generation QD-OLED panels can be used in monitors and TVs, and the TV versions can reach a peak brightness of up to 3,000 nits. That matches the peak brightness of LG’s new META 2.0 WRGB OLED panels (with Micro Lens Array technology). Samsung says it achieved this using advanced panel drive technology and artificial intelligence (AI). Moreover, compared to last year’s QD-OLED panels, this year’s panels are 50% brighter in red, green, and blue, which should mean deeper colors and more effective HDR performance.

The company partnered with the well-known color expert firm Pantone to ensure accurate color representation. At Samsung Display’s CES 2024 booth, attendees can compare the colors on the QD-OLED TV panels with Pantone’s color chips to see if they’re accurate. A Samsung Display representative said, “This is the year that Samsung Display’s QD-OLEDs prove its unrivaled superiority in picture quality and establish themselves as ‘monitor heroes.’”

The 55-inch, 65-inch, and 77-inch versions of some new Samsung QD-OLED TVs use these newer QD-OLED panels. They offer up to a 144Hz refresh rate, HDR10+ Adaptive, and AMD FreeSync Premium Pro. However, Samsung TVs with these panels aren’t quoting 3,000-nit peak brightness figures. Samsung’s TV business is only quoting a 20% increase in peak brightness compared to last year, which, assuming last year’s models peaked at roughly 1,500 nits, works out to around 1,800 nits. Remember, Samsung Display (the panel manufacturer) and Samsung Visual Display (the TV and monitor maker) are separate businesses and can have different goals. Samsung Visual Display buys QD-OLED panels from Samsung Display but may choose not to use their full brightness potential, limiting them according to its own design strategy.

Samsung Display also announced the world’s first OLED monitor panels with refresh rates of up to 360Hz, in 27-inch and 31.5-inch sizes. The 31.5-inch QD-OLED monitor panel has a 240Hz refresh rate and 4K resolution, bringing the pixel density to about 140 PPI, roughly the same density as a 65-inch 8K TV. The 27-inch panel has QHD+ resolution and a 360Hz refresh rate.
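The 140 PPI figure and the 65-inch 8K comparison can be checked with the standard pixels-per-inch formula: the diagonal length in pixels divided by the diagonal size in inches. This sketch assumes standard 16:9 4K (3840 × 2160) and 8K (7680 × 4320) resolutions.

```python
import math

def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch: the diagonal length in pixels divided by the diagonal in inches."""
    return math.hypot(width_px, height_px) / diagonal_in

monitor_ppi = ppi(3840, 2160, 31.5)  # 31.5-inch 4K QD-OLED monitor panel
tv_8k_ppi = ppi(7680, 4320, 65)      # 65-inch 8K TV for comparison
print(f"31.5-inch 4K monitor: {monitor_ppi:.1f} PPI")
print(f"65-inch 8K TV:        {tv_8k_ppi:.1f} PPI")
```

This gives about 139.9 PPI for the monitor and 135.6 PPI for the TV, so the two densities are within roughly 3% of each other.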

These QD-OLED panels will likely be used by several PC brands, including Alienware, ASUS, HP, MSI, and even Samsung.

As reported by CNET,

C Seed makes incredibly expensive custom-built televisions for the ultra-wealthy, and the N1 is its latest offering. The 137-inch version is one of two N1 models in existence.

The most incredible thing about the N1 is the way it folds into a compact rectangular chunk when not in use. Press a button and the screen divides into parts that slowly butterfly together, then descend into the rectangle, hiding the screen entirely. The whole process takes about two and a half minutes. The folded N1 looks more like a solid metal bench than a TV.

Unfolded, the N1 has a stunning, bright, seamless picture, and the screen can rotate 180 degrees. The divisions between the different sections of the screen were invisible to my eye, thanks to a proprietary system the company calls Adaptive Gap Calibration. It automatically measures the distance between the edges, uses sensors to detect offsets and calibrates the brightness of adjacent LEDs to compensate.
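C Seed hasn’t published how Adaptive Gap Calibration works, so the following is only a hypothetical sketch of the general idea of brightness-based seam correction. It assumes a sensor reports the center-to-center spacing between the last LED column of one screen section and the first column of the next, and that the edge LEDs can be dimmed or boosted within a limited range. The function name and the `max_gain` limit are invented for illustration.

```python
def seam_gain(pitch_mm: float, seam_spacing_mm: float, max_gain: float = 1.5) -> float:
    """Brightness multiplier for the LED columns on either side of a module seam.

    Hypothetical model: an edge column covers half a normal pitch on one side
    and half the measured seam spacing on the other, so its share of screen
    area is (pitch + spacing) / 2. Scaling its brightness by that share,
    relative to a normal column's pitch, keeps light per unit area even
    across the seam.
    """
    if pitch_mm <= 0 or seam_spacing_mm <= 0:
        raise ValueError("pitch and spacing must be positive")
    gain = (pitch_mm + seam_spacing_mm) / (2 * pitch_mm)
    return min(gain, max_gain)  # LEDs can only be driven so much harder

# 1.0 mm pitch, sections sitting 0.2 mm too far apart: boost edge columns ~10%
print(seam_gain(1.0, 1.2))
# Sections 0.1 mm too close together: dim edge columns ~5%
print(seam_gain(1.0, 0.9))
```

A gap that is too wide would otherwise show up as a faint dark line and one that is too narrow as a bright line; scaling the neighboring LEDs is one simple way to hide both.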

The TV uses micro-LED technology, the same display tech found on Samsung’s The Wall — another massive, super-expensive TV that happens to be C Seed’s major competitor. C Seed says the gigantic 4K resolution screen can achieve 4,000 nits peak brightness, with HDR and wide color gamut. In my brief viewing time with C Seed’s demo material, the picture quality looked great. As with The Wall, I could discern individual pixels when I was very close to the screen, but from any normal seating distance, the image looked smooth and sharp.

As reported by Tom’s Guide,

When it comes to LED-LCD TVs, few things matter more than overall brightness, contrast and color saturation. The new 110-inch Hisense UX Mini-LED TV has all of them in spades.

To be specific, Hisense claims its new TV, coming later this year, can reach a peak brightness of 10,000 nits and uses 40,000 local dimming zones. Sure, OLED can control brightness on a pixel-by-pixel basis, but Hisense’s new monster screen is roughly 10 times as bright as a typical OLED TV while still covering 100% of the DCI-P3 color space.
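Local dimming zones are how a Mini-LED LCD approximates OLED’s per-pixel control. The sketch below assumes the 110-inch UX has a 4K (3840 × 2160) panel, which puts about 207 pixels in each of the 40,000 zones, and uses a simple “brightest pixel wins” rule to set each zone’s backlight. Hisense’s actual dimming algorithm isn’t public; this is only an illustration of the concept.

```python
PIXELS_4K = 3840 * 2160  # assumed panel resolution
ZONES = 40_000
print(f"{PIXELS_4K / ZONES:.0f} pixels per zone on average")

def zone_backlight(frame: list[list[float]], zone_h: int, zone_w: int) -> list[list[float]]:
    """Return one backlight level per zone: the brightest pixel inside it."""
    rows, cols = len(frame), len(frame[0])
    levels = []
    for top in range(0, rows, zone_h):
        row_levels = []
        for left in range(0, cols, zone_w):
            block = [frame[r][c]
                     for r in range(top, min(top + zone_h, rows))
                     for c in range(left, min(left + zone_w, cols))]
            row_levels.append(max(block))
        levels.append(row_levels)
    return levels

# A dark 4x4 frame with one bright pixel (say, a star), split into 2x2 zones.
frame = [[0.0] * 4 for _ in range(4)]
frame[0][1] = 1.0
print(zone_backlight(frame, 2, 2))
```

The single bright pixel turns its whole zone’s backlight to full, and the LCD layer has to block that light for the zone’s dark pixels. Any light that leaks through shows up as blooming, which per-pixel OLED avoids; more zones shrink the affected area.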

If specs are meaningless to you, the takeaway here is that the Hisense UX (one of the brightest TVs of 2023) is coming in a new screen size with some serious specs that should have Samsung, LG and TCL shaking in their boots.

As reported by The Verge,

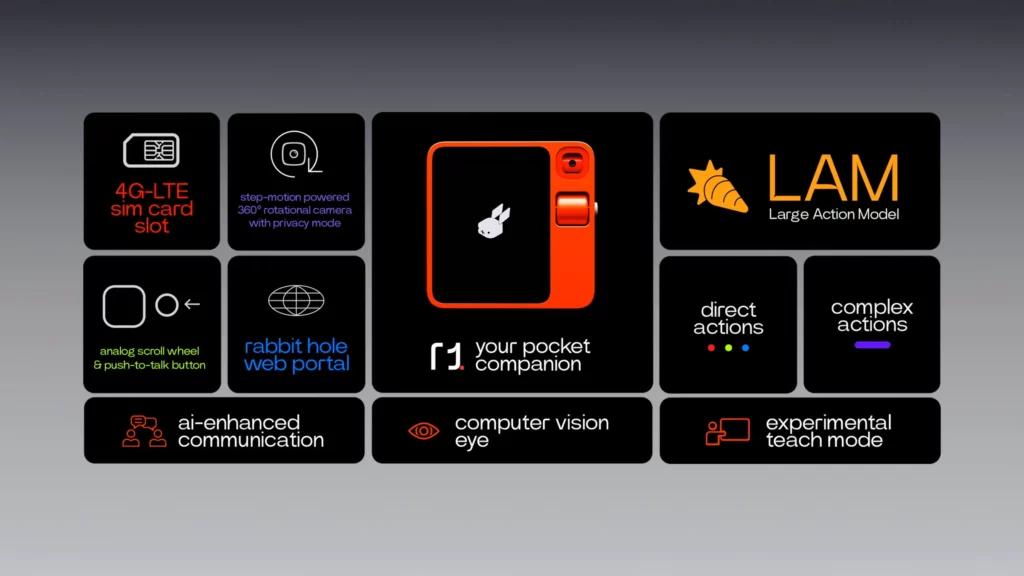

Jesse Lyu, the CEO and founder of an AI startup called Rabbit, says he doesn’t want to replace your smartphone. At least not right away. His company’s new gadget, a $199 standalone AI device called the R1, is so staggeringly ambitious that Lyu seems to think he can’t help but replace your phone at some point. Just not quite yet.

The R1 looks a little like a Playdate console or maybe a modernized version of one of those ’90s-era handheld TVs. It’s a standalone gadget about half the size of an iPhone with a 2.88-inch touchscreen, a rotating camera for taking photos and videos, and a scroll wheel / button you press to navigate around or talk to the device’s built-in assistant. It has a 2.3GHz MediaTek processor, 4GB of memory, and 128GB of storage, all inside a rounded body designed in collaboration with the design firm Teenage Engineering. All Rabbit says about the battery is that it lasts “all day.”

The software inside the R1 is the real story: Rabbit’s operating system, called Rabbit OS, and the AI tech underneath. Rather than a ChatGPT-like large language model, Rabbit says Rabbit OS is based on a “Large Action Model,” and the best way I can describe it is as a sort of universal controller for apps. “We wanted to find a universal solution just like large language models,” Lyu says. “How can we find a universal solution to actually trigger our services, regardless of whether you’re a website or an app or whatever platform or desktop?”

In spirit, it’s an idea similar to Alexa or Google Assistant. Rabbit OS can control your music, order you a car, buy your groceries, send your messages, and more, all through a single interface. No juggling apps and logins: just ask for what you want and let the device deliver. The R1’s on-screen interface will be a series of category-based cards, for music or transportation or video chats, and Lyu says the screen mostly exists so that you can verify the model’s output on your own.

The R1 also has a dedicated training mode, which you can use to teach the device how to do something, and it will supposedly be able to repeat the action on its own going forward. Lyu gives an example: “You’ll be like, ‘Hey, first of all, go to a software called Photoshop. Open it. Grab your photos here. Make a lasso on the watermark and click click click click. This is how you remove watermark.’” It takes 30 seconds for Rabbit OS to process, Lyu says, and then it can automatically remove all your watermarks going forward.
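Rabbit hasn’t published how its training mode works, but the basic “show it once, repeat it later” idea resembles classic record-and-replay automation. The sketch below is purely illustrative: the `Skill` and `Step` classes and every action name are hypothetical and not part of Rabbit OS.

```python
from dataclasses import dataclass, field

@dataclass
class Step:
    action: str  # hypothetical action name, e.g. "open_app" or "lasso"
    target: str  # what the action applies to; "{file}" marks the variable input

@dataclass
class Skill:
    name: str
    steps: list[Step] = field(default_factory=list)

    def record(self, action: str, target: str) -> None:
        """Training mode: store one demonstrated step."""
        self.steps.append(Step(action, target))

    def replay(self, file: str) -> list[str]:
        """Repeat the demonstrated steps on a new input file."""
        return [f"{s.action}({s.target.format(file=file)})" for s in self.steps]

# Demonstrate the task once...
remove_watermark = Skill("remove watermark")
remove_watermark.record("open_app", "Photoshop")
remove_watermark.record("open_file", "{file}")
remove_watermark.record("lasso", "watermark")
remove_watermark.record("fill_selection", "background")
remove_watermark.record("save", "{file}")

# ...then reuse it on new photos without further instruction.
for photo in ["beach.jpg", "party.jpg"]:
    print(remove_watermark.replay(photo))
```

A fixed script like this breaks as soon as an app’s layout or the watermark’s position changes; coping with that variation is the hard part any real implementation has to solve.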

The R1 is available for preorder now, and Lyu says the device will start shipping in March. He even thinks, and maybe hopes, he might beat Humane’s AI Pin to market. Since announcing the R1 at CES, Rabbit has already sold over 30,000 units.

As reported by Tom’s Guide,

Nvidia showed off how far its generative AI platform, Avatar Cloud Engine (ACE), has come at CES 2024. In a cyberpunk bar setting, the NPC Nova responded when ACE Senior Product Manager Seth Schneider asked her how she was doing. When Schneider asked the barman, Jin, for a drink, the AI character promptly served him one.

The company basically just gave us a preview of how we’ll soon be interacting with in-game characters — as if we’re having a conversation with a friend.

Others who tried the demo for themselves had natural conversations with Nova and Jin about different topics. They also asked Jin for some ramen and whether he could dim the bar’s lights, and Jin managed both with ease.

The platform works by capturing a player’s speech and then converting it into text for a large language model (LLM) to process in order to generate an NPC’s response. The process then goes into reverse so that the player hears the game character speaking and also sees realistic lip movements that are handled by an animation model.

For this demo, Nvidia collaborated with Convai, a generative AI NPC-creation platform. Convai allows game developers to give a character a backstory and set its voice so that it can take part in interactive conversations. The latest features include real-time character-to-character interaction, scene perception, and actions.

According to Nvidia, the ACE models use a combination of local and cloud resources that transform gamer input into a dynamic character response. The models include Riva Automatic Speech Recognition (ASR) for transcribing human speech, Riva Text To Speech (TTS) to generate audible speech, Audio2Face (A2F) to generate facial expressions and lip movements and the NeMo LLM to understand player text and transcribed voice and generate a response.
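The speech-in, speech-out loop can be sketched as four stages chained together. Every function below is a hypothetical stub that merely stands in for the role of Riva ASR, the LLM, Riva TTS and Audio2Face; none of them is an Nvidia or Convai API, and the canned replies exist only so the flow can run.

```python
from dataclasses import dataclass

@dataclass
class NpcReply:
    text: str
    audio: bytes
    mouth_shapes: list[str]

# Hypothetical stubs; each stands in for one model named above.
def speech_to_text(player_audio: bytes) -> str:            # Riva ASR's role
    return player_audio.decode("utf-8")

def generate_reply(npc: str, backstory: str, player_text: str) -> str:  # the LLM's role
    # A real LLM would condition on the backstory; this stub uses a keyword.
    if "drink" in player_text.lower():
        return f"{npc}: Coming right up."
    return f"{npc}: Doing fine, thanks."

def text_to_speech(text: str) -> bytes:                    # Riva TTS's role
    return text.encode("utf-8")

def animate_face(audio: bytes) -> list[str]:               # Audio2Face's role
    # One placeholder mouth shape per spoken word.
    return [f"mouth_{i}" for i, _ in enumerate(audio.split())]

def npc_turn(npc: str, backstory: str, player_audio: bytes) -> NpcReply:
    player_text = speech_to_text(player_audio)            # speech -> text
    reply = generate_reply(npc, backstory, player_text)   # text -> response
    audio = text_to_speech(reply)                         # response -> speech
    return NpcReply(reply, audio, animate_face(audio))    # speech -> lip sync

turn = npc_turn("Jin", "Bartender at a cyberpunk ramen bar", b"Can I get a drink?")
print(turn.text, turn.mouth_shapes)
```

Keeping each stage behind its own function mirrors the split Nvidia describes, where individual models can run locally or in the cloud and be swapped independently.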

In its blog, Nvidia said that open-ended conversations with NPCs open up a world of possibilities for interactivity in games. However, it believes such conversations should have consequences, such as triggering actions, which means NPCs need to be aware of the world around them.

The latest collaboration between Nvidia and Convai led to new features including character spatial awareness, the ability for characters to take action based on a conversation, and the ability for game characters to hold unscripted conversations with each other without any input from the player.

Nvidia said it’s creating digital avatars that use ACE technologies in collaboration with top game developers including Charisma.AI and NetEase Games — the latter of which has already invested $97 million to create its AI-powered MMO, Justice Mobile.

As reported by VideoCardz,

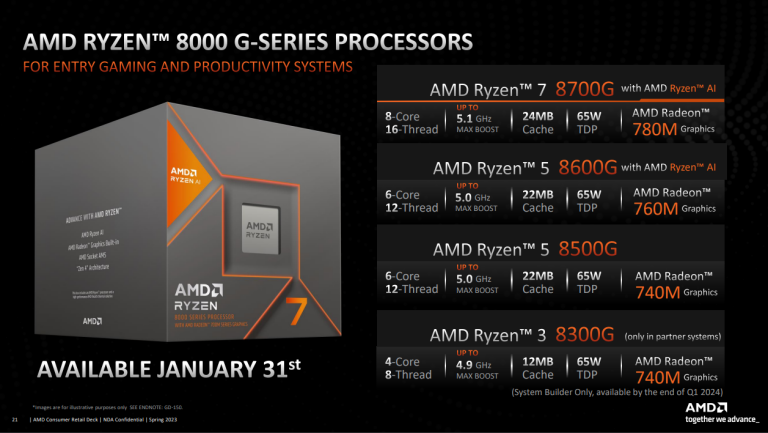

The AM5 socket is getting new Ryzen CPUs with the debut of the first desktop 8000 series. Despite naming that suggests a next-generation CPU series, these processors are in fact Zen4/RDNA3 mobile silicon in a new form factor, presented as a modern desktop APU series. These APUs integrate AMD’s latest CPU and GPU architectures, specifically Zen4/Zen4c cores and RDNA3 graphics.

AMD bills the Ryzen 8000G series as its first desktop processors with a built-in XDNA1 Ryzen AI accelerator. That claim applies only to the 8-core Ryzen 7 8700G and 6-core Ryzen 5 8600G, since they alone use the larger Phoenix silicon. In contrast, the 6-core 8500G and 4-core 8300G use the smaller Phoenix 2 die, which has no Ryzen AI accelerator. These two chips also include Zen4c cores, and the 8300G will not be available as a DIY part, only through OEMs and system integrators.

In terms of specifications, all SKUs boast a default TDP of 65W and are packaged with integrated RDNA3 graphics, either Radeon 780M (12CU), Radeon 760M (8CU) or Radeon 740M (4CU).

AMD is comparing its 8700G and 8600G with Intel’s Core i7-14700K, whose integrated GPU is based on the Xe-LP architecture. The Intel chip’s UHD Graphics 770 has 32 execution units and is less powerful than the Xe-LPG graphics in the new Core Ultra 100 “Meteor Lake” series. Because Intel has not yet released or officially announced a desktop series with Alchemist-based integrated graphics, the 14700K is a relevant point of comparison.

According to AMD, the Ryzen 5 8600G delivers between 1.0 and 3.4 times the 1080p low-detail gaming performance of the i7-14700K’s integrated graphics, while the Ryzen 7 8700G delivers between 1.1 and 4.0 times.

In a more practical scenario, AMD is comparing its 8700G APU with a system powered by a Core i5-13400F paired with a GeForce GTX 1650 desktop graphics card. AMD claims its solution delivers between 0.89 and 1.31 times the gaming performance of that system, and between 1.1 and 4.6 times its performance in productivity benchmarks.

AMD confirms Ryzen 7 8700G will retail at $329, Ryzen 5 8600G will cost $229 and Ryzen 5 8500G will be a $179 desktop APU. The 8300G is not launching through the DIY market; hence it has no official pricing. All SKUs except 8300G will become available on January 31.

As reported by Polygon,

During CES 2024, Nvidia announced its mid-gen refresh of the GeForce RTX 40 series desktop graphics cards, titled the RTX 40 Super series. Three of its GPUs are getting super-fied: the RTX 4070, the 4070 Ti, and the 4080. Across the board, these GPUs are packed with more everything, from shader and ray tracing cores, to the Tensor AI cores that are integral to making its deep learning supersampling (DLSS) graphics feature work. Nvidia’s blog post covering this announcement goes deeper into the improvements, but here’s the quick version.

Each of the three comes in at either the same price as the original RTX 40 series version or slightly cheaper. The entry-level RTX 4070 Super, with 12 GB of GDDR6X video memory, keeps the same $599.99 price (launching Jan. 17), but its core count puts it much closer to the performance of the RTX 4070 Ti, likely making it a stellar choice for people who want to play games without compromise at 1440p. While its total graphics power has risen to 220 W from the RTX 4070’s 200 W, it still requires only a 650 W power supply.

Moving up the rungs, the $799.99 RTX 4070 Ti Super is now much closer to the RTX 4080 in terms of performance (seeing a trend here?) than the original model was. That’s due to a boost in cores, plus a jump from 12 GB to 16 GB of video memory. Power requirements are virtually identical between the new and old 4070 Ti versions, requiring at least a 700 W power supply. It’s tough to imagine this card struggling to run anything in 1440p well — or in 4K, depending on the title. This one will debut on Jan. 24.

Finally, the RTX 4080 Super is as super as it gets, for now. It’s a similar story for this $999.99 4K-ready graphics card: more cores, a minor boost in clock speed, and slightly lower power draw while gaming compared to the original RTX 4080. It’s also $200 cheaper than the RTX 4080’s $1,199.99 launch price. You’ll need a 750 W power supply to run this massive 3-slot GPU, which launches on Jan. 31.

As reported by Ars Technica,

Four years ago at CES 2020, one of Samsung’s quirkier little projects was “Ballie,” a cute little ball robot that would wheel around the house, stream camera footage, and act as a roving smart speaker. The coolest thing about 2020 Ballie was that it would have looked right at home on the set of a Star Wars movie: it was a ball droid, with the wheels integrated into the spherical body. At CES 2024, Ballie is back with a new design, and according to a report from The Washington Post, it will hit the market sometime this year. But much like a concept car watered down to make it to production, the 2024 Ballie has lost much of the prototype’s cute appeal.

The 2024 Ballie is no longer a ball droid; instead, it is a sphere mounted on three wheels, giving it basically the same locomotion as a robot vacuum. The new Ballie is also bigger, growing from about the size of a softball to the size of a bowling ball, and it’s now a two-handed lift. The bigger body gives the production Ballie room for a more practical battery and a projector. It looks like the sides of the sphere (where the wheels are mounted) are stationary, while the sphere’s center can still rotate up or down, letting Ballie aim the camera and projector mounted on the front.

The full suite of features appears to include smart-speaker-style voice commands, smart home controls, remote camera access, person recognition, home navigation, and various projector features. Ballie’s projector can be aimed at the floor, wall, or ceiling to display videos and video calls, mirror a Samsung Galaxy phone, or show a mouse-and-keyboard workspace, powered by either a nearby PC or possibly Samsung DeX. The Washington Post says the projector is 1080p, and there’s enough battery for “two to three hours of continued projector use.”

Samsung’s presentation ended with the plea to “bring home your new companion.” The Washington Post interviewed Kang-il Chung, Samsung’s VP and head of the company’s Future Planning group, who said, “With the way things are progressing, I don’t see any big issue with reaching our goal of releasing it within this year.” If it’s released to consumers, Ballie will battle Amazon’s Astro robot in the nascent home robot market, though that $1,600 robot still isn’t generally available.

As reported by CNET,

The brand-new Oro Dog Companion Robot retails for $799, and inventory will begin shipping in April.

The Oro is built with advanced AI, founder Divye Bhutani said as the robot waited obediently beside us at the brand’s CES booth. The smart bot pet nanny is fitted with two-way audio and a video screen so you can interact with your dog remotely. It even allows you to capture images and videos to share with friends.

A built-in dispenser launches treats for your good boy or girl on your command, and a separate automatic food bowl releases food on schedule, or on command if you’re away during your pet’s regular feeding time.

A ball thrower housed in Oro’s middle will play fetch for as long as your canine can keep up, provided you can train them to bring the ball back. Oro can also navigate the home autonomously using a camera and lidar-based mapping system, tagging along with your pup and keeping you clued in on the action.

Oro aims to learn your dog’s behavior patterns and snap into action with soothing music or physical interaction when it senses distress, restlessness or the desire to play. Because distress manifests differently in different dogs, Oro comes programmed to recognize audible and physical signs of distress in 10 of the most popular domestic breeds.

As reported by PetaPixel,

Swarovski Optik, a leading name in high-end binoculars, announced the AX Visio, billed as the world’s first AI-supported binoculars, capable of recognizing over 9,000 animal species. They also feature a 13-megapixel sensor and can capture photos and video.

The AX Visio is a pair of 10x-magnification binoculars with a field of view of 112 meters at 1,000 meters, and it goes beyond typical binoculars thanks to a battery-powered set of intelligent features. The company says it aims to assist bird watchers and wildlife enthusiasts in the field through smart, AI-assisted functions as well as the ability to take photos and videos.
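Binocular specs often quote field of view as a width at a distance, which can be converted to an angle with basic trigonometry. The sketch below converts the AX Visio’s stated 112 meters at 1,000 meters into degrees and into the feet-at-1,000-yards form common on US spec sheets; the function names are just for illustration.

```python
import math

def true_fov_degrees(field_width_m: float, distance_m: float) -> float:
    """Angle subtended by a field of the given width at the given distance."""
    return math.degrees(2 * math.atan((field_width_m / 2) / distance_m))

def fov_feet_at_1000_yards(field_width_m: float, distance_m: float) -> float:
    """Same field expressed as feet of width at 1,000 yards (3,000 ft)."""
    return field_width_m / distance_m * 3000

print(f"{true_fov_degrees(112, 1000):.2f} degrees")
print(f"{fov_feet_at_1000_yards(112, 1000):.0f} ft at 1,000 yd")
```

That works out to a true field of view of about 6.4 degrees, or 336 feet at 1,000 yards.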

Running on a modified version of Android, the binoculars use AI subject recognition to identify birds and other animals (over 9,000 species in total), telling users exactly what they are observing. That information is displayed in the binoculars’ eyepiece view and also embedded in the metadata of any photos captured with them.

That’s right, these aren’t just binoculars for observation: the AX Visio is also a camera. The binoculars can capture photos and videos of observations, allowing users to revisit those experiences later or share their findings with other enthusiasts. The AX Visio binoculars are equipped with a 13-megapixel camera (4,208 x 3,120 pixels) and can capture video at three resolutions: 640×480, HD 1280×720, and Full HD 1920×1080, at 30 or 60 frames per second.

As reported by Ars Technica,

Gaming hardware has done a lot in the last decade to push more pixels across screens faster. But one piece of hardware has always complicated things: the eyeball. Nvidia is targeting that last part of the visual quality chain with its newest G-Sync offering, Pulsar.

Motion blur, when it’s not caused by slow LCD pixel transitions, is caused by “the persistence of an image on the retina, as our eyes track movement on-screen,” as Nvidia explains it. Prior improvements in display tech, like variable refresh rate, Ultra Low Motion Blur, and Variable Overdrive, have helped with the hardware causes of this problem. The eye’s own persistence, however, can only be addressed by strobing a monitor’s backlight.

You can’t just set that light blinking, however. Strobing at a varying frequency causes flicker, and timing the strobe to the monitor’s refresh rate (itself tied to the graphics card’s output) has been tricky. Nvidia says it has solved that issue with G-Sync Pulsar, employing “a novel algorithm” in “synergizing” its variable refresh smoothing and monitor pulsing. The result is that pixels transition from one color to another in a way that reduces motion blur and pixel ghosting.
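A common rule of thumb shows why strobing helps: on a sample-and-hold display, the blur you perceive while tracking a moving object is roughly its on-screen speed multiplied by how long each frame stays lit. The sketch below applies that rule at 360 Hz; the 1,920 pixels-per-second speed and the 25% strobe duty cycle are illustrative assumptions, not published Pulsar figures.

```python
def blur_px(speed_px_per_s: float, refresh_hz: float, duty_cycle: float = 1.0) -> float:
    """Approximate perceived motion blur, in pixels, while the eye tracks motion.

    duty_cycle = 1.0 models a normal sample-and-hold display (each frame lit
    for the whole refresh period); smaller values model a strobed backlight
    that is lit for only part of each frame.
    """
    persistence_s = duty_cycle / refresh_hz  # how long each frame is visible
    return speed_px_per_s * persistence_s

speed = 1920  # an object crossing 1,920 pixels per second (assumed)
print(f"360 Hz, sample-and-hold: {blur_px(speed, 360):.1f} px")
print(f"360 Hz, 25% strobe:      {blur_px(speed, 360, 0.25):.1f} px")
```

Cutting the lit time to a quarter cuts the blur to a quarter (about 5.3 px down to about 1.3 px here), but it also cuts average brightness, which is one reason controlling the pulse’s brightness and duration matters.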

Nvidia also claims that Pulsar can help with the visual discomfort caused by some strobing effects, as the feature “intelligently controls the pulse’s brightness and duration.”

To accommodate this “radical rethinking of display technology,” a monitor needs Nvidia’s own chips built in. No such monitors are available yet, but the Asus ROG Swift PG27 Series G-Sync, with a 360 Hz refresh rate, is coming “later this year.” No price for that monitor has been announced.

It’s hard to verify how this looks and feels without hands-on time. PC Gamer checked out Pulsar at CES this week and verified that, yes, it’s easier to read the name of the guy you’re going to shoot while you’re strafing left and right at an incredibly high refresh rate. Nvidia also provided a video, captured at 1,000 frames per second, for those curious.

As reported by The Verge,

OpenAI has publicly responded to a copyright lawsuit by The New York Times, calling the case “without merit” and saying it still hoped for a partnership with the media outlet.

In a blog post, OpenAI said the Times “is not telling the full story.” It took particular issue with claims that its ChatGPT AI tool reproduced Times stories verbatim, arguing that the Times had manipulated prompts to include regurgitated excerpts of articles. “Even when using such prompts, our models don’t typically behave the way The New York Times insinuates, which suggests they either instructed the model to regurgitate or cherry-picked their examples from many attempts,” OpenAI said.

OpenAI claims it attempted to reduce regurgitation from its large language models and that the Times refused to share examples of this reproduction before filing the lawsuit. It said the verbatim examples “appear to be from year-old articles that have proliferated on multiple third-party websites.” The company did admit that it took down a ChatGPT feature, called Browse, that unintentionally reproduced content.

However, the company maintained its long-standing position that in order for AI models to learn and solve new problems, they need access to “the enormous aggregate of human knowledge.” It reiterated that while it respects the legal right to own copyrighted works, and has offered opt-outs from training data inclusion, it believes training AI models with data from the internet falls under fair use rules that allow for repurposing copyrighted works. The company announced in August 2023, nearly a year after it launched ChatGPT, that website owners could start blocking its web crawlers from accessing their data.
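Blocking is done through a site’s robots.txt file, using the user-agent token OpenAI published for its crawler, GPTBot. The sketch below checks such a rule set with Python’s standard `urllib.robotparser`; the example URL and the `SomeOtherBot` crawler name are placeholders.

```python
from urllib.robotparser import RobotFileParser

# robots.txt rules that block OpenAI's GPTBot while leaving other crawlers alone.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://example.com/news/article.html"
print("GPTBot allowed:", parser.can_fetch("GPTBot", url))
print("Other crawler allowed:", parser.can_fetch("SomeOtherBot", url))
```

robots.txt is a voluntary convention: it only keeps out crawlers that choose to honor it, and it does nothing about content that was collected before the rule was added.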

The company recently made a similar argument to the UK House of Lords, claiming no AI system like ChatGPT can be built without access to copyrighted content. It said AI tools have to incorporate copyrighted works to “represent the full diversity and breadth of human intelligence and experience.”

But OpenAI said it still hopes it can continue negotiations with the Times for a partnership similar to the ones it inked with Axel Springer and The Associated Press. “We are hopeful for a constructive partnership with The New York Times and respect its long history,” the company said.

“The blog concedes that OpenAI used the Times’s work, along with the work of many others, to build ChatGPT. As the Times’s complaint states, ‘through Microsoft’s Bing Chat (recently rebranded as Copilot) and OpenAI’s ChatGPT, defendants seek to free-ride on the Times’s massive investment in its journalism by using it to build substitutive products without permission or payment,’” Ian Crosby, partner at Susman Godfrey and lead counsel for the Times, said in a statement sent to The Verge. “That’s not fair use by any measure.”