All of my opinions are italicized and sources are in blue.

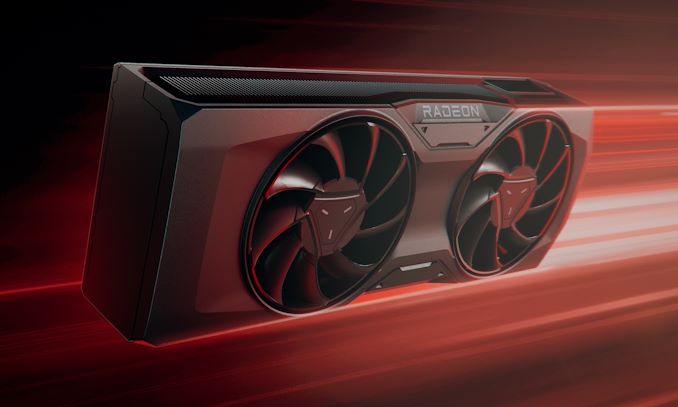

AMD launched its RDNA 3 architecture and RX 7000-series GPUs last December. The first GPUs to be released were the flagship Radeon RX 7900 XT and 7900 XTX. In May, AMD skipped its mid-tier GPUs and went straight to the RX 7600. Now, after months of waiting, the 7800 XT and 7700 XT have been announced and are expected to arrive on September 6, 2023.

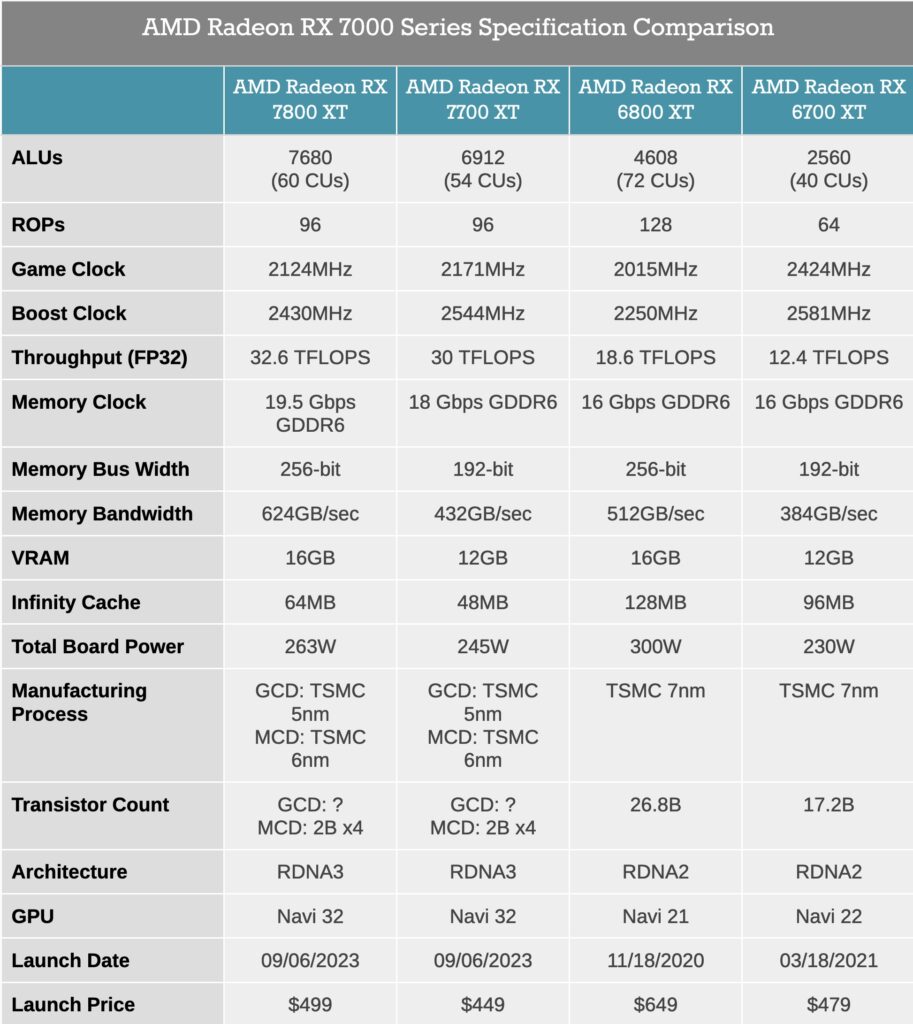

The spec sheet above, taken from AnandTech, compares the new cards with the last-generation 6800 XT and 6700 XT. While not shown in this spec sheet, the 7800 XT has fewer compute units (and therefore fewer shader and ray tracing cores) than the 6800 XT: 60 versus 72. The 7700 XT, on the other hand, actually has more than the 6700 XT (54 versus 40). Fewer cores sound bad on paper, but in reality the newer GPU architecture may make more of a difference than core count.

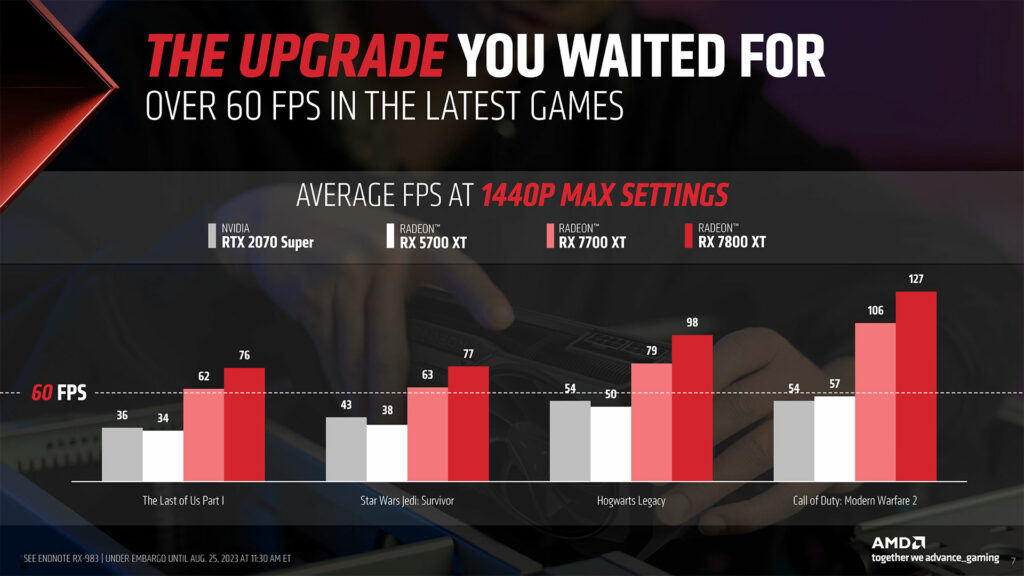

While you should never trust manufacturer-provided performance graphs, it is still interesting to look at them. Instead of looking at what they showed us, let’s look at what they didn’t show us. AMD’s last-generation cards are not mentioned in the graphs or anywhere in the presentation. Instead, AMD compared against the 5700 XT, which was released 4 years ago and should not have been included. Cross-referencing AMD’s numbers with older reviews suggests the 7700 XT is only about 10% faster than the 6700 XT. Further analysis from independent reviews is required to truly compare the two GPUs.
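The cross-referencing described above can be sketched as a simple ratio chain: if a manufacturer graph gives the 7700 XT’s speed relative to the 5700 XT, and an older review gives the 6700 XT’s speed relative to the same 5700 XT, dividing one by the other estimates the 7700 XT against the 6700 XT. This is a minimal sketch only; the 1.65x and 1.50x inputs are hypothetical placeholders, not figures from AMD’s slides or any review, and chaining results from different test setups adds error of its own.

```python
def chain_relative(a_vs_base: float, c_vs_base: float) -> float:
    """Estimate A's performance relative to C when both were measured against the same baseline."""
    if c_vs_base <= 0:
        raise ValueError("baseline ratio must be positive")
    return a_vs_base / c_vs_base


# Hypothetical inputs, for illustration only:
rx7700xt_vs_5700xt = 1.65  # e.g. read off a manufacturer graph
rx6700xt_vs_5700xt = 1.50  # e.g. taken from an older independent review

ratio = chain_relative(rx7700xt_vs_5700xt, rx6700xt_vs_5700xt)
print(f"7700 XT vs 6700 XT: {(ratio - 1) * 100:.0f}% faster")  # prints: 7700 XT vs 6700 XT: 10% faster
```

The weak point is the shared baseline: if the two sources tested the 5700 XT in different games or at different settings, the chained estimate inherits that mismatch.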

In addition to the cards themselves, AMD will also launch FSR 3 on September 6th. The latest version of its upscaling tech adds frame generation, which AMD calls “Fluid Motion Frames”. It generates a new frame between existing ones, similar to Nvidia’s DLSS 3, although AMD’s approach relies on optical flow rather than machine learning.

FSR 3 will still work on rivals’ graphics cards, as long as game developers add the tech to their games. AMD will also add frame generation to its driver at an unspecified date. That way, you’ll be able to inject extra frames into any DX11 or DX12 game with your AMD graphics card, no developer support required.
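Frame generation of this kind can be pictured as interleaving: each generated frame sits between two rendered ones, so the number of displayed frames roughly doubles. The sketch below only models that ordering and the best-case frame-rate arithmetic; the `gen(...)` strings are hypothetical stand-ins for generated images, and real implementations such as FSR 3 or DLSS 3 have processing overhead that keeps them below the ideal doubling.

```python
from typing import List


def interleave_generated(rendered: List[str]) -> List[str]:
    """Place one generated frame between each pair of consecutive rendered frames."""
    out: List[str] = []
    for i, frame in enumerate(rendered):
        out.append(frame)
        if i + 1 < len(rendered):
            # Hypothetical placeholder: a real implementation would synthesize an
            # image from both neighbouring frames, so it needs the next frame first.
            out.append(f"gen({frame},{rendered[i + 1]})")
    return out


def best_case_displayed_fps(rendered_fps: float) -> float:
    """One generated frame per rendered frame, ignoring any processing overhead."""
    return rendered_fps * 2


print(interleave_generated(["F0", "F1", "F2"]))
# ['F0', 'gen(F0,F1)', 'F1', 'gen(F1,F2)', 'F2']
print(best_case_displayed_fps(60))  # 120
```

Because `gen(F0,F1)` cannot be produced until F1 exists, interpolation has to hold back each newly rendered frame briefly, which is one reason frame generation can add input latency.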

Again, further analysis is required before any buying decisions can be made.

The PlayStation Portal is a $200 handheld device that puts PS5 games in the palm of your hand… as long as you’re on Wi-Fi. Previously known as Project Q, Portal uses the PS5’s remote play feature to stream games from your console. Importantly, the Portal also offers all the features and ergonomics of the PS5’s excellent DualSense controller, including haptic feedback, adaptive triggers, and its touchpad.

To be clear here, Portal isn’t doing anything inherently new. Remote Play has existed since the PS3 era, allowing you to stream games from your console to a handheld, smartphone, tablet, PC, and Mac. That said, Remote Play has never really been an ideal experience – you’re either compromising on visual quality, control setup, ergonomics, or all of the above. You can make it work, but it’s not super convenient.

The DualSense grips feel exactly like a regular DualSense controller, and all of their best features are available here. Beyond all the regular DualSense inputs, there’s a USB-C port for charging, a 3.5mm jack for wired headphones, and a small pair of speaker grilles if you don’t want to use headphones. Connectivity-wise, it supports Wi-Fi and PlayStation Link but not Bluetooth. The only wireless headphones that work are Sony’s first-party Pulse Elite headset ($150) and Pulse Explore wireless earbuds ($200).

The only use case I can see for this is when someone else is using your TV and you want to play your PS5. It doesn’t make sense for anything else. It works best when you are a few feet from your console. So, since you’re already there, why not just play directly on the console for a better experience?

As reported by XDA-Developers,

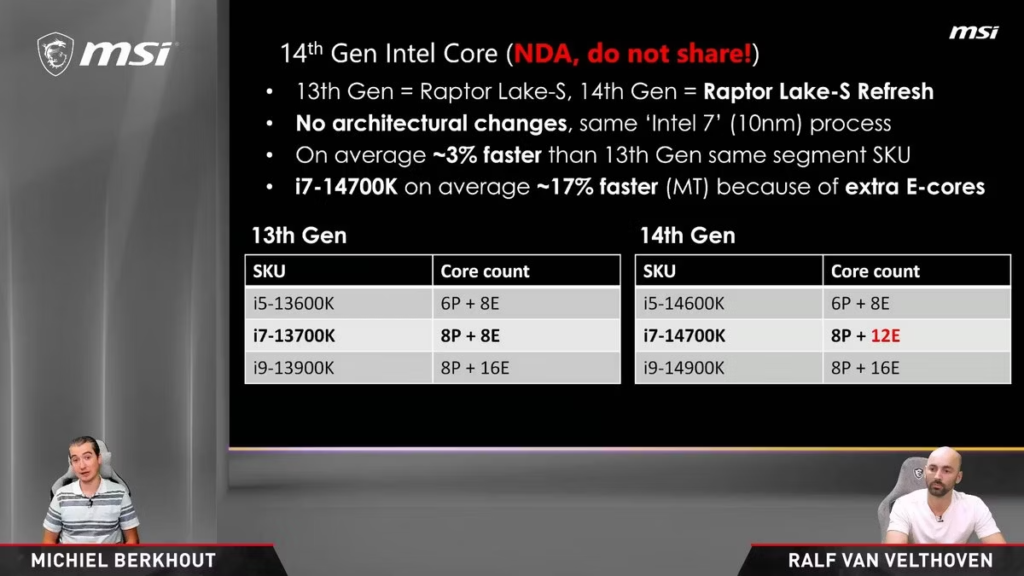

Intel is expected to announce its 14th-gen Core ‘Raptor Lake Refresh’ CPUs later this year as a follow-up to last year’s Raptor Lake lineup. Various leaks over the past few months have revealed quite a bit about the upcoming processors, but the latest info about them comes straight from Taiwanese motherboard manufacturer MSI, confirming several key details, such as the names and core counts of multiple chips in the next-gen family.

The leaked information comes from an unlisted MSI video on YouTube (via @DarkmontTech), which discusses some of the key details about Intel’s upcoming CPUs. While the video has since been made private, it revealed that the Raptor Lake Refresh lineup will use the Intel 7 (10nm) process node and support higher DDR5 frequencies. Most notably, the next-gen chips will apparently be only about 3 percent faster than their predecessors, with the i7-14700K as the exception, offering up to 17 percent higher multi-threaded performance than the 13700K.

The performance boost for the 14700K will be on account of four additional ‘Efficient’ cores in the new chip, which is said to come with 8 Performance cores and 12 Efficient cores, compared to the 8P+8E configuration of the 13700K. Both the i5-14600K and the i9-14900K, however, are expected to retain the same core counts as their predecessors. While the former is set to ship with the same 6P+8E configuration as the i5-13600K, the latter will reportedly come with 8 P cores and 16 E cores, the same as the i9-13900K.
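The leaked configurations translate into core and thread counts with simple arithmetic. The sketch below assumes, as on current Raptor Lake chips, that each Performance core runs two threads (Hyper-Threading) and each Efficient core runs one; the `CoreConfig` class and the labels are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoreConfig:
    p_cores: int  # Performance cores (Raptor Cove)
    e_cores: int  # Efficient cores (Gracemont)

    @property
    def cores(self) -> int:
        return self.p_cores + self.e_cores

    @property
    def threads(self) -> int:
        # Assumes Hyper-Threading on P-cores only: 2 threads per P-core, 1 per E-core.
        return 2 * self.p_cores + self.e_cores


chips = {
    "i5-13600K / i5-14600K": CoreConfig(6, 8),
    "i7-13700K": CoreConfig(8, 8),
    "i7-14700K (leaked)": CoreConfig(8, 12),
    "i9-13900K / i9-14900K": CoreConfig(8, 16),
}

for name, cfg in chips.items():
    print(f"{name}: {cfg.p_cores}P+{cfg.e_cores}E = {cfg.cores} cores / {cfg.threads} threads")
```

Under that assumption, the four extra E-cores take the 14700K from the 13700K’s 16 cores and 24 threads to 20 cores and 28 threads, which fits the larger multi-threaded gain reported for that chip alone.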

According to earlier leaks, the new chips will retain the same architecture as the Raptor Lake lineup, meaning they will have the same Raptor Cove P-cores and Gracemont E-cores as their predecessors. They will be based on the 10nm++ process node, with at least one of the higher-end chips expected to hit 6GHz+ frequencies. The faster performance, however, will come at a cost, as the top-end Raptor Lake Refresh chips could have a 300W+ TDP. On the positive side, they will be compatible with existing LGA 1700 motherboards, meaning users will be able to upgrade their processor without changing their entire setup.

Paralysis had robbed the two women of their ability to speak. For one, the cause was amyotrophic lateral sclerosis, or ALS, a disease that affects the motor neurons. The other had suffered a stroke in her brain stem. Though they can’t enunciate clearly, they remember how to formulate words.

Now, after volunteering to receive brain implants, both are able to communicate through a computer at a speed approaching the tempo of normal conversation. By parsing the neural activity associated with the facial movements involved in talking, the devices decode their intended speech at a rate of 62 and 78 words per minute, respectively—several times faster than the previous record. Their cases are detailed in two papers published Wednesday by separate teams in the journal Nature.

“It is now possible to imagine a future where we can restore fluid conversation to someone with paralysis, enabling them to freely say whatever they want to say with an accuracy high enough to be understood reliably,” said Frank Willett, a research scientist at Stanford University’s Neural Prosthetics Translational Laboratory, during a media briefing on Tuesday. Willett is an author on a paper produced by Stanford researchers; the other was published by a team at UC San Francisco.

While these rates are still slower than the roughly 160-word-per-minute pace of natural conversation among English speakers, scientists say they’re an exciting step toward restoring real-time speech using a brain-computer interface, or BCI. “It is getting close to being used in everyday life,” says Marc Slutzky, a neurologist at Northwestern University who wasn’t involved in the new studies.
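To put those rates in context, dividing each decoding speed by the roughly 160 words per minute of natural conversation gives the fraction of normal speaking pace: about 39% for the ALS participant and about 49% for the stroke participant. A minimal sketch of that arithmetic, using only the figures quoted in this piece:

```python
NATURAL_WPM = 160  # approximate rate of natural English conversation, as quoted above


def fraction_of_natural(wpm: float, natural_wpm: float = NATURAL_WPM) -> float:
    """Return a decoding rate as a fraction of natural conversational speed."""
    return wpm / natural_wpm


for label, wpm in [("ALS participant (Stanford)", 62), ("Stroke participant (UCSF)", 78)]:
    print(f"{label}: {wpm} wpm is {fraction_of_natural(wpm):.0%} of natural speech")
```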

In the second paper, researchers at UCSF built a BCI using an array that sits on the surface of the brain rather than inside it. A paper-thin rectangle studded with 253 electrodes, it detects the activity of many neurons across the speech cortex. They placed this array on the brain of a stroke patient named Ann and trained a deep-learning model to decipher neural data it collected as she moved her lips without making sounds. Over several weeks, Ann repeated phrases from a 1,024-word conversational vocabulary.

There are trade-offs to both groups’ approaches. Implanted electrodes, like the ones the Stanford team used, record the activity of individual neurons, which tends to provide more detailed information than a recording from the brain’s surface. But they’re also less stable, because implanted electrodes shift around in the brain. Even a movement of a millimeter or two causes changes in recorded activity. “It is hard to record from the same neurons for weeks at a time, let alone months to years at a time,” Slutzky says. And over time, scar tissue forms around the site of an implanted electrode, which can also affect the quality of a recording.

On the other hand, a surface array captures less detailed brain activity but covers a bigger area. The signals it records are more stable than the spikes of individual neurons since they’re derived from thousands of neurons, Slutzky says.