As reported by The Verge,

Google has released Gemma 2B and 7B, a pair of open-source AI models that let developers use the research that went into its flagship Gemini more freely. While Gemini is a big closed AI model that directly competes with (and is nearly as powerful as) OpenAI’s ChatGPT, the lightweight Gemma will likely be suitable for smaller tasks like simple chatbots or summarizations.

But what these models lack in complexity, they may make up for in speed and cost of use. Despite their smaller size, Google claims Gemma models “surpass significantly larger models on key benchmarks” and are “capable of running directly on a developer laptop or desktop computer.” They will be available via Kaggle, Hugging Face, Nvidia’s NeMo, and Google’s Vertex AI.

Gemma’s release into the open-source ecosystem is starkly different from how Gemini was released. Developers can build on Gemini, but only through APIs or by working on Google’s Vertex AI platform, so Gemini is considered a closed AI model. By making Gemma open source, Google lets more people experiment with its AI rather than turn to competitors that offer better access.

Both model sizes will be available with a commercial license regardless of organization size, number of users, or type of project. However, Google, like other companies, often prohibits its models from being used for specific tasks such as weapons development programs.

Gemma will also ship with “responsible AI toolkits,” because guardrails can be harder to place on open models than on more closed systems like Gemini. Tris Warkentin, product management director at Google DeepMind, said the company undertook “more extensive red-teaming to Gemma because of the inherent risks involved with open models.”

The responsible AI toolkit will allow developers to create their own guidelines or a banned word list when deploying Gemma to their projects. It also includes a model debugging tool that lets users investigate Gemma’s behavior and correct issues.
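The article doesn't describe the toolkit's actual interface, so the sketch below is purely hypothetical. It shows the general idea behind a developer-supplied banned word list as a minimal post-generation filter that redacts listed words from model output before users see it. `BANNED_WORDS` and `filter_output` are illustrative names and are not part of Google's toolkit.

```python
import re

# Hypothetical developer-supplied banned word list (not part of Google's toolkit).
BANNED_WORDS = {"badword", "forbidden"}

# A single case-insensitive pattern that matches any banned word as a whole word.
_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(w) for w in sorted(BANNED_WORDS)) + r")\b",
    re.IGNORECASE,
)


def filter_output(text: str, mask: str = "[redacted]") -> str:
    """Replace every banned word in a model's output with a mask string."""
    return _PATTERN.sub(mask, text)


if __name__ == "__main__":
    print(filter_output("This reply contains a Forbidden term."))
```

Because the pattern uses word boundaries, a longer word that merely contains a banned term (for example "forbiddenness") passes through unchanged. A real deployment would probably pair a list like this with classifier-based checks.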

The models work best for language-related tasks in English for now, according to Warkentin. “We hope we can build with the community to address market needs outside of English-language tasks,” he told reporters.

Developers can use Gemma for free in Kaggle, and first-time Google Cloud users get $300 in credits to use the models. The company said researchers can apply for up to $500,000 in cloud credits.

As reported by Reuters,

Nvidia hit $2 trillion in market value on Friday, riding on insatiable demand for its chips, which made the Silicon Valley firm the pioneer of the generative artificial intelligence boom.

The milestone followed another bumper revenue forecast from the chip designer that drove up its market value by $277 billion on Thursday – Wall Street’s largest one-day gain on record.

Its rapid ascent in the past year has led analysts to draw parallels to the picks-and-shovels providers of the 1800s gold rush, as Nvidia’s chips are used by almost all generative AI players, from OpenAI to Google.

That has helped the company vault from $1 trillion to $2 trillion in market value in roughly nine months. That is the fastest climb among U.S. companies and less than half the time it took tech giants Apple and Microsoft.

“For AI companies today – the leaders of the sector – what’s going to be binding for them is not going to be demand. It’s just going to be their capacity to answer the surging demand,” said Ipek Ozkardeskaya, senior analyst at Swissquote Bank.

As reported by CNBC,

Social media company Reddit filed its IPO prospectus with the Securities and Exchange Commission on Thursday after a yearslong run-up. The company plans to trade on the New York Stock Exchange under the ticker symbol “RDDT.”

Its market debut, expected in March, will be the first major tech initial public offering of the year. It’s the first social media IPO since Pinterest went public in 2019.

Reddit said it had $804 million in annual sales for 2023, up 20% from the $666.7 million it brought in the previous year, according to the filing. The social networking company’s core business relies on online advertising sales from its website and mobile app.

Reddit said that by 2027 it estimates the “total addressable market globally from advertising, excluding China and Russia, to be $1.4 trillion.” Reddit said the current addressable advertising market is $1.0 trillion, sans China and Russia.

The company is building on its search capabilities and plans to “more fully address the $750 billion opportunity in search advertising that S&P Global Market Intelligence estimates the market to be in 2027.”

Reddit said it plans to use artificial intelligence to improve its ad business and that it expects to open new revenue channels by offering tools and incentives to “drive continued creation, improvements, and commerce.”

It’s also in the early stages of developing and monetizing a data-licensing business in which third parties would be allowed to access and search data on its platform.

For example, Google on Thursday announced an expanded partnership with Reddit that will give the search giant access to the company’s data to, among other uses, train its AI models.

Reddit said that eligible users, known as Redditors, and its non-employee moderators can participate in the company’s IPO offering through its “directed share program.” Because of this, Reddit said there’s a possibility of “individual investors, retail or otherwise constituting a larger proportion of the investors participating in this offering than is typical for an initial public offering.” Reddit said it had an average of more than 60,000 daily active moderators in December 2023.

Reddit first filed a confidential draft of its public offering prospectus with the Securities and Exchange Commission in December 2021. The company had 2,013 employees as of December 31, 2023, up from 1,942 a year earlier.

Reddit has raised about $1.3 billion in funding and has a post-money valuation of $10 billion, according to deal-tracking service PitchBook. Publishing giant Condé Nast bought Reddit in 2006. Reddit spun out of Condé Nast’s parent company, Advance Magazine Publishers, in 2011.

Advance now holds 34% of the voting power. Other notable shareholders include Tencent and Sam Altman, CEO of startup OpenAI.

As reported by Ars Technica,

The European Commission (EC) is concerned that TikTok isn’t doing enough to protect kids. It alleges that the short-video app may be sending young users down rabbit holes of harmful content while making it easy for them to pretend to be adults and avoid the protective content filters that do exist.

The allegations came Monday when the EC announced a formal investigation into how TikTok may be breaching the Digital Services Act (DSA) “in areas linked to the protection of minors, advertising transparency, data access for researchers, as well as the risk management of addictive design and harmful content.”

“We must spare no effort to protect our children,” Thierry Breton, European Commissioner for Internal Market, said in the press release, reiterating that the “protection of minors is a top enforcement priority for the DSA.”

This makes TikTok the second platform investigated for possible DSA breaches, after X (formerly Twitter) came under fire last December. Both are being scrutinized after submitting transparency reports in September that the EC said failed to meet the DSA’s strict standards in predictable areas, such as advertising transparency and data access for researchers.

But while X is additionally being investigated over alleged dark patterns and disinformation—following accusations last October that X wasn’t stopping the spread of Israel/Hamas disinformation—it’s TikTok’s young user base that appears to be the focus of the EC’s probe into its platform.

“As a platform that reaches millions of children and teenagers, TikTok must fully comply with the DSA and has a particular role to play in the protection of minors online,” Breton said. “We are launching this formal infringement proceeding today to ensure that proportionate action is taken to protect the physical and emotional well-being of young Europeans.”

Over the coming months, the EC will likely request more information from TikTok, picking apart its DSA transparency report. The probe could require interviews with TikTok staff or inspections of TikTok’s offices.

Upon concluding its investigation, the EC could require TikTok to take interim measures to fix any issues that are flagged. The Commission could also make a decision regarding non-compliance, potentially subjecting TikTok to fines of up to 6 percent of its global turnover.

An EC press officer, Thomas Regnier, said the Commission suspected that TikTok “has not diligently conducted” risk assessments to properly maintain mitigation efforts protecting “the physical and mental well-being of their users, and the rights of the child.”

In particular, its algorithm may risk “stimulating addictive behavior,” and its recommender systems “might drag its users, in particular minors and vulnerable users, into a so-called ‘rabbit hole’ of repetitive harmful content,” Regnier said. Further, TikTok’s age verification system may be subpar, with the EU alleging that TikTok perhaps “failed to diligently assess the risk of 13-17-year-olds pretending to be adults when accessing TikTok,” Regnier said.

To better protect TikTok’s young users, the EU’s investigation could force TikTok to update its age-verification system and overhaul its default privacy, safety, and security settings for minors.

“In particular, the Commission suspects that the default settings of TikTok’s recommender systems do not ensure a high level of privacy, security, and safety of minors,” Regnier said. “The Commission also suspects that the default privacy settings that TikTok has for 16-17-year-olds are not the highest by default, which would not be compliant with the DSA, and that push notifications are, by default, not switched off for minors, which could negatively impact children’s safety.”

TikTok could avoid steep fines by committing to remedies recommended by the EC at the conclusion of its investigation.

Regnier said that the EC does not comment on ongoing investigations, but its probe into X has spanned three months so far. Because the DSA sets no deadlines for these kinds of enforcement proceedings, the duration of both investigations will ultimately depend on how much “the company concerned cooperates,” the EU’s press release said.

A TikTok spokesperson said that TikTok “would continue to work with experts and the industry to keep young people on its platform safe,” confirming that the company “looked forward to explaining this work in detail to the European Commission.”

“TikTok has pioneered features and settings to protect teens and keep under-13s off the platform, issues the whole industry is grappling with,” TikTok’s spokesperson said.

All online platforms are now required to comply with the DSA, but enforcement on TikTok began in late August 2023. A TikTok press release that August promised that the platform would be “embracing” the DSA. But in its transparency report, submitted the next month, TikTok acknowledged that the report only covered “one month of metrics” and may not satisfy DSA standards.

“We still have more work to do,” TikTok’s report said, promising that “we are working hard to address these points ahead of our next DSA transparency report.”

As reported by Ars Technica,

Wyze cameras experienced a glitch on Friday that gave 13,000 customers access to images, and in some cases video, from Wyze cameras that didn’t belong to them. The company claims 99.75 percent of accounts weren’t affected, but for some, that revelation doesn’t eradicate feelings of “disgust” and concern.

Wyze claims that an outage on Friday left customers unable to view camera footage for hours. Wyze has blamed the outage on a problem with an undisclosed Amazon Web Services (AWS) partner but hasn’t provided details.

Monday morning, Wyze sent emails to customers, including those Wyze says weren’t affected, informing them that the outage led to 13,000 people being able to access data from strangers’ cameras, as reported by The Verge.

Per Wyze’s email:

We can now confirm that as cameras were coming back online, about 13,000 Wyze users received thumbnails from cameras that were not their own and 1,504 users tapped on them. Most taps enlarged the thumbnail, but in some cases an Event Video was able to be viewed. …

According to Wyze, while it was trying to bring cameras back online from Friday’s outage, users reported seeing thumbnails and Event Videos that weren’t from their own cameras. Wyze’s emails added:

The incident was caused by a third-party caching client library that was recently integrated into our system. This client library received unprecedented load conditions caused by devices coming back online all at once. As a result of increased demand, it mixed up device ID and user ID mapping and connected some data to incorrect accounts.

In response to customers reporting that they were viewing images from strangers’ cameras, Wyze said it blocked customers from using the Events tab, then required an additional verification layer to access the Wyze app’s Event Video section. Wyze co-founder and CMO David Crosby also said Wyze logged out people who had used the Wyze app on Friday in order to reset tokens.

Wyze’s emails also said the company modified its system “to bypass caching for checks on user-device relationships until [it identifies] new client libraries that are thoroughly stress tested for extreme events” like the one that occurred on Friday.
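Wyze hasn't published its code, so what follows is only a hypothetical sketch of the general pattern its email describes. A cache keeps serving ordinary data, but the question "does this user own this device?" is answered from the authoritative record every time. `OwnershipStore`, `DeviceService`, and the in-memory dictionaries are illustrative stand-ins for a real database and caching client.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class OwnershipStore:
    """Authoritative record of which user owns which device (stand-in for a database)."""
    owners: dict[str, str] = field(default_factory=dict)  # device_id -> user_id

    def owner_of(self, device_id: str) -> str | None:
        return self.owners.get(device_id)


@dataclass
class DeviceService:
    store: OwnershipStore
    # Cache for non-sensitive data such as thumbnail paths, keyed by device ID.
    thumbnail_cache: dict[str, str] = field(default_factory=dict)

    def can_view(self, user_id: str, device_id: str) -> bool:
        # Authorization deliberately bypasses the cache, so a stale or
        # mixed-up cache entry can never grant access to someone else's camera.
        return self.store.owner_of(device_id) == user_id

    def thumbnail_for(self, user_id: str, device_id: str) -> str:
        if not self.can_view(user_id, device_id):
            raise PermissionError(f"user {user_id} does not own {device_id}")
        # Cached data is served only after the authoritative check passes.
        if device_id not in self.thumbnail_cache:
            self.thumbnail_cache[device_id] = f"/thumbs/{device_id}.jpg"
        return self.thumbnail_cache[device_id]


if __name__ == "__main__":
    service = DeviceService(OwnershipStore({"cam-1": "alice", "cam-2": "bob"}))
    print(service.thumbnail_for("alice", "cam-1"))  # /thumbs/cam-1.jpg
    try:
        service.thumbnail_for("bob", "cam-1")
    except PermissionError as err:
        print("blocked:", err)
```

The trade-off is an extra authoritative lookup on every request. That cost buys the guarantee that a caching failure degrades performance rather than privacy, which matches the choice Wyze says it made until it finds better-tested libraries.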

This is the second time that something like this has happened to Wyze customers in five months. In September, some Wyze users reported seeing feeds of cameras that they didn’t own via Wyze’s online viewer. Wyze claimed that for 40 minutes, as many as 2,300 people who were logged in to the online viewer may have been able to see 10 strangers’ feeds. The company blamed this on a “web caching issue” and said that it deployed “numerous technical measures” to prevent the problem from repeating, including limiting account permissions, updating company policies and employee training, and hiring an external security firm for penetration testing.

In 2022, security firm Bitdefender disclosed security vulnerabilities in Wyze cameras that could allow people to access feeds from cameras they didn’t own and the contents of strangers’ camera SD cards. Exploiting the vulnerability required the hacker to have been on the same network as the targeted device at some point. Even so, some long-time users abandoned Wyze because it hadn’t acted on or disclosed the vulnerabilities for years. In March, Wyze settled a proposed class action regarding the vulnerabilities; terms weren’t disclosed.

Wyze’s story is another painful reminder of the inherent risks in putting Internet-connected video cameras in sensitive parts of the home, especially indoors. Wyze tried placing some technical blame on an AWS partner, but that’s not comforting, considering that Wyze chose that partner and is responsible for ensuring its tech is implemented properly (AWS didn’t report an outage at the time of Wyze’s camera outage). At a minimum, this incident is a reminder to research the companies behind smart products before choosing a security system, looking for any alarming security breaches, flaws, glitches, and checkered pasts that you’d rather not experience personally.

As reported by The Guardian,

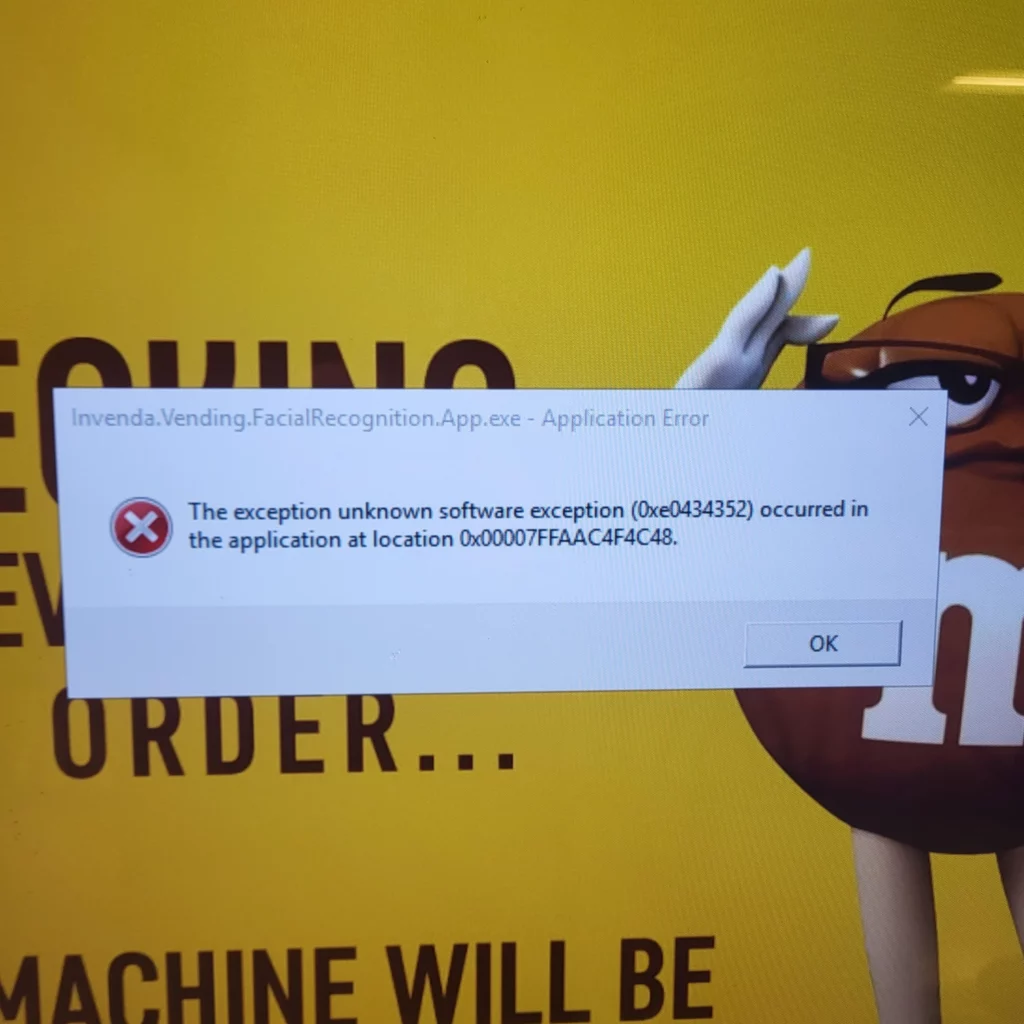

A malfunctioning vending machine at a Canadian university has inadvertently revealed that a number of the university’s vending machines have been secretly using facial recognition technology.

Earlier this month, a snack dispenser at the University of Waterloo showed an error message – Invenda.Vending.FacialRecognition.App.exe – on the screen.

There was no prior indication that the machine was using the technology, nor that a camera was monitoring student movement and purchases. Users were not asked for permission for their faces to be scanned or analyzed.

“We wouldn’t have known if it weren’t for the application error. There’s no warning here,” River Stanley, who reported on the discovery for the university’s newspaper, told CTV News.

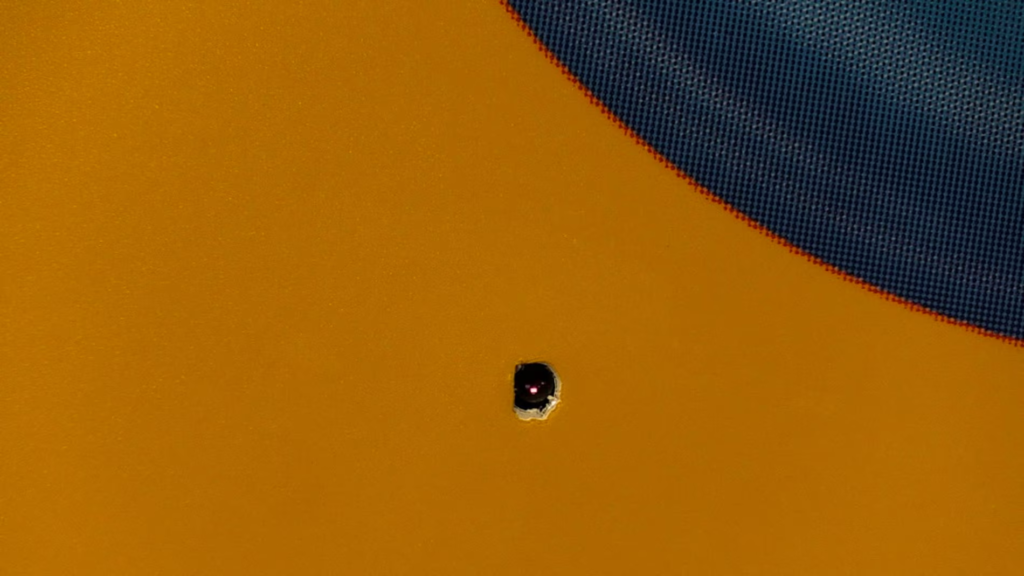

Invenda, the company that produces the machines, advertises its use of “demographic detection software”, which it says can determine customers’ gender and age. It claims the technology is compliant with GDPR, the European Union’s privacy standards, but it is unclear whether it meets Canadian equivalents.

In April, the national retailer Canadian Tire ran afoul of privacy laws in British Columbia after it used facial recognition technology without notifying customers. The province’s privacy commissioner said that even if the stores had obtained permission, the company failed to show a reasonable purpose for collecting facial information.

The University of Waterloo pledged in a statement to remove the Invenda machines “as soon as possible”, and said that in the interim it had “asked that the software be disabled”.

In the meantime, students at the Ontario university responded by covering the hole that they believe houses the camera with gum and paper.

You can play Doom on a Husqvarna robot lawnmower

Intuitive Machines’ Odysseus is first US lander on the moon since 1972

Sony testing PC compatibility for PSVR2

Uncontrolled European satellite falls to Earth after 30 years in orbit

Switch successor is now set for early 2025

Nvidia is combining Nvidia Control Panel and GeForce Experience